With NVIDIA seemingly steaming ahead in its latest quarterly results, Apple Intelligence receiving a lukewarm response from users, Wall Street increasingly worried about the return on investment from the hyperscalers' massive capital spending, reports that CIOs are struggling to find ROI for AI, and news in the last two days that Intel and Samsung are both struggling to win AI-related revenue and that the US is introducing new export controls on semiconductor equipment to China, it can be hard to navigate the AI commercial landscape and work out who the winners and losers might be over the next year or two.

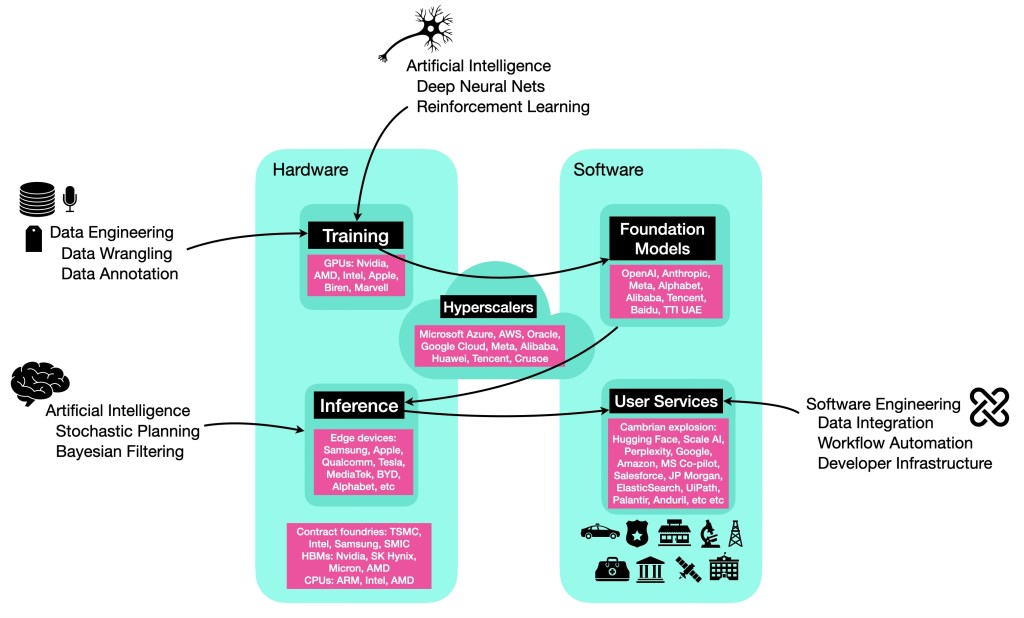

To help answer that question, I have created the diagram above, which sketches out key aspects of the AI supply chain for foundation models (such as large language models and their multi-modal extensions), together with a list of current technology leaders in each part of the supply chain. (Credit: this builds on a diagram from Angus Donohoo at The Intelligent Investor, augmented with my personal experience with AI technologies and vendors.) A key thing to note is that building foundation models and using foundation models are completely different propositions. The former, labelled Training in the diagram, is the very expensive task of finding a function M, from a very large class of possible deep neural networks, that compresses a very large dataset well. (This is a rather involved process and, while there are many good intuitive descriptions of how LLMs work, the only clear mathematical description of exactly how they work that I have been able to find is Phuong and Hutter 2022.) The latter, labelled Inference in the diagram, involves evaluating the function M on a few given data points (constructed from a user query), which is a relatively inexpensive task that almost anyone can do.
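To give a feel for the size of the gap between the two, here is a back-of-the-envelope Python sketch using a rule of thumb widely used in the scaling-law literature: training a dense transformer with N parameters on D tokens costs roughly 6ND floating-point operations (FLOPs), while inference costs roughly 2N FLOPs per token processed. The model size, dataset size and query length below are illustrative assumptions, not figures for any particular model.

```python
# Rough compute estimates for a dense transformer, using the common
# approximations C_train ~ 6*N*D and C_infer ~ 2*N per token.
# These ignore attention overhead, hardware efficiency and repeated runs.


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute: ~6 FLOPs per parameter per training
    token (forward pass ~2, backward pass ~4)."""
    return 6.0 * n_params * n_tokens


def inference_flops(n_params: float, n_tokens: float) -> float:
    """Approximate inference compute: ~2 FLOPs per parameter per token
    processed (forward pass only)."""
    return 2.0 * n_params * n_tokens


if __name__ == "__main__":
    N = 70e9        # assumed model size: 70 billion parameters
    D = 2e12        # assumed training set: 2 trillion tokens
    query = 1_000   # assumed user query plus answer: 1,000 tokens

    train = training_flops(N, D)
    infer = inference_flops(N, query)
    print(f"training:  {train:.2e} FLOPs")
    print(f"one query: {infer:.2e} FLOPs")
    print(f"ratio:     {train / infer:.1e}")
```

With these assumed numbers, training costs about 8.4 × 10^23 FLOPs against about 1.4 × 10^14 FLOPs for a single query, a ratio of roughly six billion. Under this approximation the ratio reduces to 3D divided by the query length, so it does not depend on model size at all.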

The diagram can be used to understand how industry dynamics may shift under different scenarios. For example, there have been reports that the AI Scaling Hypothesis may no longer be holding, and that major players are now shifting more resources towards doing better inference (or, technically, stochastic planning) rather than building ever-bigger models. The diagram above is a good starting point for working out the winners and losers in that scenario.
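As a rough illustration of what spending more compute at inference time can mean, here is a minimal Python sketch of best-of-N sampling, one of the simplest forms of test-time search: draw several stochastic samples from a model and keep the one a verifier scores highest. The generator and scorer are hypothetical toy stand-ins for an LLM and a verifier (random guesses at a factorisation of 91, scored by distance from the target); this is not any vendor's actual method.

```python
import random
from typing import Callable, List


def best_of_n(generate: Callable[[random.Random], str],
              score: Callable[[str], float],
              n: int,
              seed: int = 0) -> str:
    """Trade extra inference compute for quality: draw n stochastic samples
    from the generator and return the one the scorer rates highest."""
    rng = random.Random(seed)
    candidates: List[str] = [generate(rng) for _ in range(n)]
    return max(candidates, key=score)


# Hypothetical toy stand-ins: the "model" guesses a factor pair of 91 at
# random, and the "verifier" scores how close the product is to 91.
TARGET = 91


def toy_generate(rng: random.Random) -> str:
    a, b = rng.randint(2, 20), rng.randint(2, 20)
    return f"{a}*{b}"


def toy_score(answer: str) -> float:
    a, b = map(int, answer.split("*"))
    return -abs(a * b - TARGET)  # 0 is a perfect answer


if __name__ == "__main__":
    for n in (1, 10, 1000):
        ans = best_of_n(toy_generate, toy_score, n)
        print(n, ans, toy_score(ans))
```

Because every call reuses the same seed, a larger-N run sees a superset of a smaller run's candidates, so the best score can only stay the same or improve as N grows. That is the basic trade of more inference compute for better answers, without touching the underlying model.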

Until now, the AI scaling contest has largely been a two-horse race between the US and China. (As far as I know, Mistral and Falcon are the only foundation models developed from scratch outside those two countries.) The reason is simple: the AI scaling effort requires stitching together the semiconductor supply chain and the big data supply chain. Each of these on its own is already full of cornered resources dominated by a few large commercial entities, so their intersection is protected by crazily large entry barriers. To illustrate, there are really only a handful of organisations (public and private) that have the technical know-how, budget, and access to data and compute to build foundation models from scratch. (A pretty reliable estimate is that building foundation models costs around US$1 billion per year: about US$500m in data and compute and US$500m in salaries.)

On the hardware side, NVIDIA has a near-monopoly on the supply of GPUs for AI, in large part because the popular CUDA software platform only runs on NVIDIA GPUs. NVIDIA has also locked up a significant portion of TSMC's latest chip-fabrication capacity, and competitors cannot find an alternative because other contract foundries are two to five years behind TSMC in manufacturing capability. In turn, TSMC's technological lead over other foundries is built on its five-to-ten-year head start in learning to operate the costly and complex extreme ultraviolet lithography machines that essentially only the Netherlands' ASML can supply. And there are many other cornered resources and bottlenecks among the 5,000 companies that supply components to ASML, and the many other companies that supply raw materials to TSMC. So it's turtles all the way down… (See the book Chip War by Chris Miller, this CSIS report and my Short Look at Geoeconomics for more details.)

The story is, however, completely different in the bottom part of the diagram, where there are very few cornered resources or other supply chain constraints. There are many technology vendors in the Inference space, and there is something of a Cambrian explosion in the User Services space, where a thousand and one companies are building LLMs into their products and integrating them with their services.

If you are among the vast majority of organisations that haven't been able to play directly in the global AI scaling experiments so far, your time may have come if the next phase of the game is about coming up with better inference algorithms rather than building larger models. Every one of us can play that game of invention and product development without having to pay a billion dollars just to buy in.

Other relevant sources:

Here's a venture capitalist's perspective on the industry dynamics, opportunities, and risks as AI scaling shifts from model pre-training to test-time inference.

Here’s Noam Brown on the advantages of adding search / planning to ML models like LLMs, including comparisons of scaling laws on training vs inference in different domains.

Here's Subbarao Kambhampati on how LLMs on their own can't really plan, and some of the ways that LLMs can and cannot be used effectively together with planners.